This is an opinion editorial by Aleksandar Svetski, author of “The UnCommunist Manifesto” and founder of the Bitcoin-focused language model Spirit of Satoshi.

Language models are all the rage, and many people are just taking foundation models (most often ChatGPT or something similar) and then connecting them to a vector database so that when people ask their “model” a question, it responds to the answer with context from this vector database.

What is a vector database? I’ll explain that in more detail in a future essay, but a simple way to understand it is as a collection of information stored as chunks of data, that a language model can query and use to produce better responses. Imagine “The Bitcoin Standard,” split into paragraphs, and stored in this vector database. You ask this new “model” a question about the history of money. The underlying model will actually query the database, select the most relevant piece of context (some paragraph from “The Bitcoin Standard”) and then feed it into the prompt of the underlying model (in many cases, ChatGPT). The model should then respond with a more relevant answer. This is cool, and works OK in some cases, but doesn’t solve the underlying issues of mainstream noise and bias that the underlying models are subject to during their training.

This is what we’re trying to do at Spirit of Satoshi. We have built a model like what’s described above about six months ago, which you can go try out here. You’ll notice it’s not bad with some answers but it cannot hold a conversation, and it performs really poorly when it comes to shitcoinery and things that a real Bitcoiner would know.

This is why we’ve changed our approach and are building a full language model from scratch. In this essay, I will talk a little bit about that, to give you an idea of what it entails.

A More ‘Based’ Bitcoin Language Model

The mission to build a more “based” language model continues. It’s proven to be more involved than even I had thought, not from a “technically complicated” standpoint, but more from a “damn this is tedious” standpoint.

It’s all about data. And not the quantity of data, but the quality and format of data. You’ve probably heard nerds talk about this, and you don’t really appreciate it until you actually begin feeding the stuff to a model, and you get a result… which wasn’t necessarily what you wanted.

The data pipeline is where all the work is. You have to collect and curate the data, then you have to extract it. Then you have to programmatically clean it (it’s impossible to do a first-run clean manually).

Then you take this programmatically-cleaned, raw data and you have to transform it into multiple data formats (think of question-and-answer pairs, or semantically-coherent chunks and paragraphs). This you also need to do programmatically, if you’re dealing with loads of data — which is the case for a language model. Funny enough, other language models are actually good for this task! You use language models to build new language models.

Then, because there will likely be loads of junk left in there, and irrelevant garbage generated by whatever language model you used to programmatically transform the data, you need to do a more intense clean.

This is where you need to get human help, because at this stage, it seems humans are still the only creatures on the planet with the agency necessary to differentiate and determine quality. Algorithms can kind of do this, but not so well with language just yet — especially in more nuanced, comparative contexts — which is where Bitcoin squarely sits.

In any case, doing this at scale is incredibly hard unless you have an army of people to help you. That army of people can be mercenaries paid for by someone, like OpenAI which has more money than God, or they can be missionaries, which is what the Bitcoin community generally is (we’re very lucky and grateful for this at Spirit of Satoshi). Individuals go through data items and one by one select whether to keep, discard or modify the data.

Once the data goes through this process, you end up with something clean on the other end. Of course, there are more intricacies involved here. For example, you need to ensure that bad actors who are trying to botch your clean-up process are weeded out, or their inputs are discarded. You can do that in a series of ways, and everyone does it a bit differently. You can screen people on the way in, you can build some sort of internal clean-up consensus model so that thresholds need to be met for data items to be kept or discarded, etc. At Spirit of Satoshi, we’re doing a blend of both, and I guess we shall see how effective it is in the coming months.

Now… once you’ve got this beautiful clean data out the end of this “pipeline,” you then need to format it once more in preparation for “training” a model.

This final stage is where the graphical processing units (GPUs) come into play, and is really what most people think about when they hear about building language models. All the other stuff that I covered is generally ignored.

This home-stretch stage involves training a series of models, and playing with the parameters, the data blends, the quantum of data, the model types, etc. This can quickly get expensive, so you best have some damn good data and you’re better off starting with smaller models and building your way up.

It’s all experimental, and what you get out the other end is… a result…

It’s incredible the things we humans conjure up. Anyway…

At Spirit of Satoshi, our result is still in the making, and we are working on it in a couple of ways:

- We ask volunteers to help us collect and curate the most relevant data for the model. We’re doing that at The Nakamoto Repository. This is a repository of every book, essay, article, blog, YouTube video and podcast about and related to Bitcoin, and peripherals like the works of Friedrich Nietzsche, Oswald Spengler, Jordan Peterson, Hans-Hermann Hoppe, Murray Rothbard, Carl Jung, the Bible, etc.

You can search for anything there and access the URL, text file or PDF. If a volunteer can’t find something, or feel it needs to be included, they can “add” a record. If they add junk though, it won’t be accepted. Ideally, volunteers will submit the data as a .txt file along with a link.

- Community members can also actually help us clean the data, and earn sats. Remember that missionary stage I mentioned? Well this is it. We’re rolling out a whole toolbox as part of this, and participants will be able to play “FUD buster” and “rank replies” and all sorts of other things. For now, it’s like a Tinder-esque keep/discard/comment experience on data interface to clean up what’s in the pipeline.

This is a way for people who have spent years learning about and understanding Bitcoin to transform that “work” into sats. No, they’re not going to get rich, but they can help contribute toward something they might deem a worthy project, and earn something along the way.

Probability Programs, Not AI

In a few previous essays, I’ve argued that “artificial intelligence” is a flawed term, because while it is artificial, it’s not intelligent — and furthermore, the fear porn surrounding artificial general intelligence (AGI) has been completely unfounded because there is literally no risk of this thing becoming spontaneously sentient and killing us all. A few months on and I am even more convinced of this.

I think back to John Carter’s excellent article “I’m Already Bored With Generative AI” and he was so spot on.

There’s really nothing magical, or intelligent for that matter, about any of this AI stuff. The more we play with it, the more time we spend actually building our own, the more we realize there’s no sentience here. There’s no actual thinking or reasoning happening. There is no agency. These are just “probability programs.”

The way they are labeled, and the terms thrown around, whether it’s “AI” or “machine learning” or “agents,” is actually where most of the fear, uncertainty and doubt lies.

These labels are just an attempt to describe a set of processes, that are really unlike anything that a human does. The problem with language is that we immediately begin to anthropomorphize it in order to make sense of it. And in the process of doing that, it is the audience or the listener who breathes life into Frankenstein’s monster.

AI has no life other than what you give it with your own imagination. This is much the same with any other imaginary, eschatological threat.

(Insert examples around climate change, aliens or whatever else is going on on Twitter/X.)

This is, of course, very useful for globo-homo bureaucrats who want to use any such tool/program/machine for their own purposes. They’ve been spinning stories and narratives since before they could walk, and this is just the latest one to spin. And because most people are lemmings and will believe whatever someone who sounds a few IQ points smarter than them has to say, they will use that to their advantage.

I remember talking about regulation coming down the pipeline. I noticed that last week or the week before, there are now “official guidelines” or something of the sort for generative AI — courtesy of our bureaucratic overlords. What this means, nobody really knows. It’s masked in the same nonsensical language that all of their other regulations are. The net result being, once again, “We write the rules, we get to use the tools the way we want, you must use it the way we tell you, or else.”

The most ridiculous part is that a bunch of people cheered about this, thinking that they’re somehow safer from the imaginary monster that never was. In fact, they’ll probably credit these agencies with “saving us from AGI” because it never materialized.

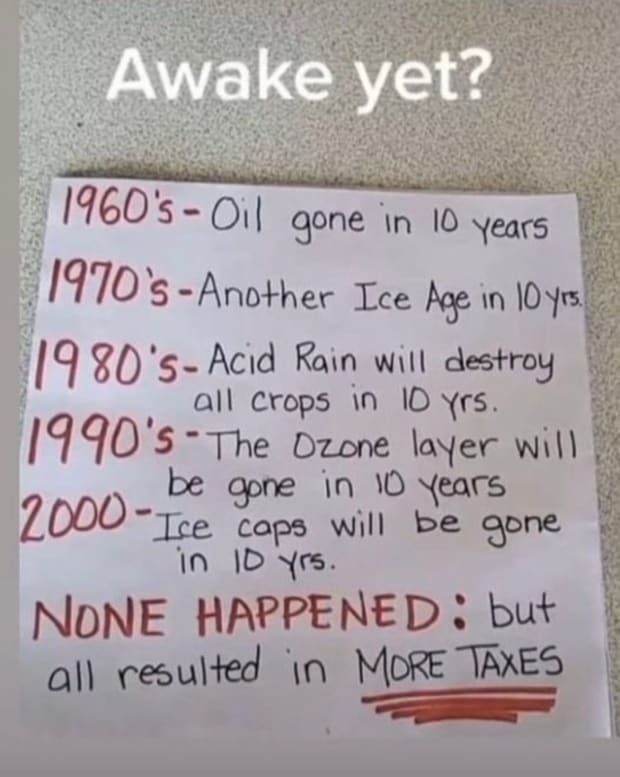

It reminds me of this:

When I posted the above picture on Twitter, the amount of idiots who responded with genuine belief that the avoidance of these catastrophes was a result of increased bureaucratic intervention told me all that I needed to know about the level of collective intelligence on that platform.

Nevertheless, here we are. Once again. Same story, new characters.

Alas — there’s really little we can do about that, other than to focus on our own stuff. We’ll continue to do what we set out to do.

I’ve become less excited about “GenAI” in general, and I get the sense that a lot of the hype is wearing off as people’s attention moves onto aliens and politics again. I’m also less convinced that there is something substantially transformative here — at least to the degree that I thought six months ago. Perhaps I’ll be proven wrong. I do think these tools have latent, untapped potential, but it’s just that: latent.

I think we have to be more realistic about what they are (instead of artificial intelligence, it’s better to call them “probability programs”) and that might actually mean we spend less time and energy on pipe dreams and focus more on building useful applications. In that sense, I do remain curious and cautiously optimistic that something does materialize, and believe that somewhere in the nexus of Bitcoin, probability programs and protocols such as Nostr, something very useful will emerge.

I am hopeful that we can take part in that, and I’d love for you also to take part in it if you’re interested. To that end, I shall leave you all to your day, and hope this was a useful 10-minute insight into what it takes to build a language model.

This is a guest post by Aleksander Svetski. Opinions expressed are entirely their own and do not necessarily reflect those of BTC Inc or Bitcoin Magazine.

Source: bitcoinmagazine.com